Effectiveness Versus Efficacy: More Than a Debate Over Language

This section was compiled by Frank M. Painter, D.C.


FROM: J Orthop Sports Phys Ther 2003 (Apr); 33 (4): 163–165

Julie M. Fritz, PT, PhD, ATC, Joshua Cleland, PT, DPT, OCS

Department of Physical Therapy,

University of Pittsburgh,

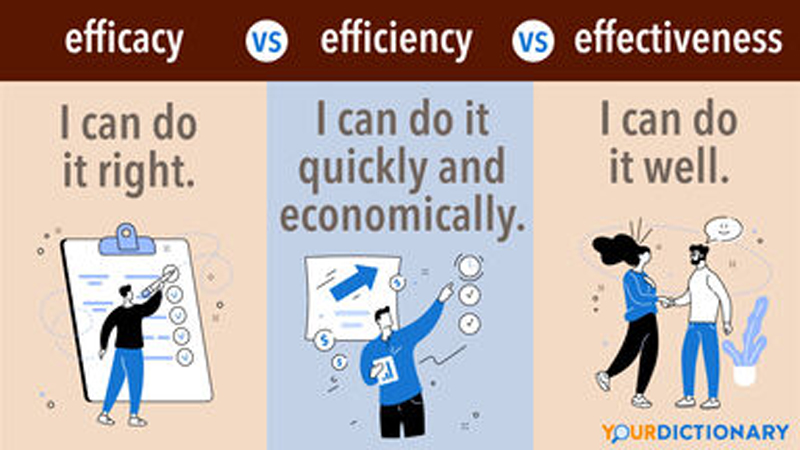

Pittsburgh, PA.As the physical therapy profession continues the paradigm shift toward evidencebased practice, it becomes increasingly important for therapists to base clinical decisions on the best available evidence. Defining the best available evidence, however, may not be as straightforward as we assume, and will inevitably depend in part upon the perspective and values of the individual making the judgment. To some, the best evidence may be viewed as research that minimizes bias to the greatest extent possible, while others may prioritize research that is deemed most pertinent to clinical practice. The evidence most highly valued and ultimately judged to be the best may differ based on which perspective predominates. One issue that highlights the importance of perspective in judging the evidence is the difference between efficacy and effectiveness approaches to research. These terms are frequently assumed to be synonyms and are often used incorrectly in the literature. There is actually a meaningful distinction between efficacy and effectiveness approaches to research. The distinction is not merely a pedantic concern within the lexicon of researchers, but impacts the nature of the results disseminated by a study, how the results may be applied to clinical practice, and finally how the results are judged by those who seek to evaluate the evidence. [5] Understanding the contrast between effectiveness and efficacy has important and very practical implications for those who seek to evaluate and apply research evidence to clinical practice.

Studies using an efficacy approach are designed to investigate the benefits of an intervention under ideal and highly controlled conditions. While this approach has many methodological advantages, efficacy studies frequently depart substantially from clinical practice in their design, for example by eliminating treatment preferences and multimodal treatment programs, controlling the skill levels of the clinicians delivering the intervention, and tightly restricting the study sample. [3, 13] The preferred design for efficacy studies is the randomized controlled trial, frequently with a no-treatment or placebo group as the comparison in order to isolate the effects of one particular intervention. [7] Studies using an efficacy approach have high internal validity and typically score highly on the scales researchers have designed to evaluate the quality of clinical trials. However, the generalizability of efficacy results to the typical practice setting has been questioned. [2] In clinical practice, therapists tend to use many different interventions within a comprehensive treatment program, so studies investigating the effects of an isolated treatment may appear less useful. In addition, clinical decision making typically entails choosing between competing treatment options, so studies comparing an intervention with no intervention (or a placebo intervention) may seem less directly applicable to that process.

An example of a study using an efficacy approach is a randomized trial by Hides et al. [6] This study examined the effects of exercise on patients with low back pain (LBP). It used a highly standardized exercise program specifically designed to isolate and strengthen the multifidus muscle. The patient population was restricted to patients with a first episode of unilateral LBP of less than 3 weeks' duration. The exercise program was compared with a control group that received no intervention other than medication and advice to remain active. The results favored the group receiving the exercises. The study design permits the conclusion that training the multifidus is beneficial for patients with LBP (compared with doing no exercise); however, the generalizability of the results may be questionable. Relatively few patients seen by physical therapists will be experiencing a first episode of LBP of less than 3 weeks' duration. Furthermore, therapists may not be as highly trained as the study authors in the particular exercise techniques used. Finally, the study cannot show whether the multifidus training program is better than an alternative exercise program. A physical therapist may choose to use this treatment based on the results of the study by Hides et al; [6] however, for all these reasons, the favorable results found in the study may not generalize to the therapist's own practice.

Studies using an effectiveness, or pragmatic, approach examine the outcomes of interventions under circumstances that more closely approximate the real world: less standardized, more multimodal treatment protocols, more heterogeneous patient samples, and delivery of the interventions in routine clinical settings. [13] Effectiveness studies may also use a randomized trial design; however, the new treatment being studied is typically compared with the standard of practice for the patient population in question. [1] Studies using an effectiveness approach tend to sacrifice some internal validity, but they have high external validity and are viewed as more applicable to everyday clinical practice. [2]

The distinction between efficacy and effectiveness studies should be viewed as a spectrum, not a strict dichotomy. Many studies have characteristics of both; nonetheless, studies of treatment outcomes can generally be described as taking either an efficacy or an effectiveness approach. Efficacy studies, with their more stringent control over treatment conditions, tend to be viewed more favorably by researchers and have generally fared better under the review criteria researchers have established to grade the evidence. For example, many systematic reviews and evidence-based clinical practice guidelines (EBCPG) include only randomized controlled trials, and some include only studies with a comparison group that receives no treatment or a placebo intervention. [4, 8, 10]

The exclusion of effectiveness studies can affect the results and recommendations of a systematic review or EBCPG. For example, the Philadelphia Panel recently developed EBCPG for a number of interventions commonly used by physical therapists to manage low back, neck, knee, and shoulder pain. The Panel reported that a limitation of previously developed EBCPG was the inclusion of studies that did not use a no-treatment or placebo comparison group. [10] The Panel therefore limited its review to studies with a comparison group that received "placebo, no treatment, or one of the interventions of interest." [10]

The impact of excluding trials that took more of an effectiveness approach is illustrated by the Panel's guidelines for therapeutic exercise in the management of chronic LBP. [11] The Panel excluded a randomized trial by O'Sullivan et al [9] that compared a program of stabilizing exercises with a comparison group that received treatment as usual, including heat, ultrasound, massage, and a general exercise program. The study was excluded because its comparison group did not meet the Panel's inclusion criteria. The results of this effectiveness study were quite favorable to the group receiving stabilization training, in both the short and the long term. [9] From the perspective of the researchers constructing the EBCPG, the comparison group was inappropriate and threatened the internal validity of the research. From a clinical perspective, however, a usual-care comparison group may be more meaningful than a no-treatment group: demonstrating that a new intervention is superior to standard treatment may be more likely to change practice behavior than showing that it is superior to no treatment at all.

Other systematic reviews have included the study by O'Sullivan et al [9] and graded it as a high-quality study. [12] Without this study, the Panel's EBCPG recommended "stretching, strengthening, and mobility exercises" [10] for patients with chronic LBP. While these recommendations are useful and support physical therapy interventions for patients with chronic LBP, it appears that, by excluding studies using an effectiveness approach, the Panel may have missed an opportunity to give therapists more specific guidance, such as recommending the specific stabilizing program used by O'Sullivan et al.

Physical therapists seeking to practice in an evidence-based manner need to recognize that effectiveness and efficacy are not interchangeable terms; each has a precise meaning as applied to research. The distinction has practical implications for how evidence is judged. Currently, the bias favors studies using an efficacy approach; however, this may be changing in many disciplines, as some who construct evidence-based guidelines are beginning to view the clinical applicability of effectiveness studies more favorably. We believe this trend should continue, and that it is likely to improve the generalizability of guidelines and, ultimately, the outcomes of everyday clinical practice.

References:

1. Arean PA, Alvidrez J. Ethical considerations in psychotherapy effectiveness research: choosing the comparison group. Ethics Behav. 2002;12(1):63–73.

2. Goldfried MR, Wolfe BE. Toward a more clinically valid approach to therapy research. J Consult Clin Psychol. 1998;66(1):143–150.

3. Guthrie E. Psychotherapy for patients with complex disorders and chronic symptoms. The need for a new research paradigm. Br J Psychiatry. 2000;177:131–137.

4. Guyatt GH, Sackett DL, Cook DJ. Users' guides to the medical literature. II. How to use an article about therapy or prevention. A. Are the results of the study valid? Evidence-Based Medicine Working Group. JAMA. 1993;270(21):2598–2601.

5. Helewa A, Walker JM. Critical Evaluation of Research in Physical Therapy Rehabilitation: Towards Evidence-Based Practice. Philadelphia, PA: WB Saunders Co.; 2000.

6. Hides JA, Jull GA, Richardson CA. Long-term effects of specific stabilizing exercises for first-episode low back pain. Spine. 2001;26(11):E243–E248.

7. Klein DF. Preventing hung juries about therapy studies. J Consult Clin Psychol. 1996;64(1):81–87.

8. Meade MO, Richardson WS. Selecting and appraising studies for a systematic review. Ann Intern Med. 1997;127(7):531–537.

9. O'Sullivan PB, Phyty GD, Twomey LT, Allison GT. Evaluation of specific stabilizing exercise in the treatment of chronic low back pain with radiologic diagnosis of spondylolysis or spondylolisthesis. Spine. 1997;22(24):2959–2967.

10. Philadelphia Panel. Philadelphia Panel evidence-based clinical practice guidelines on selected rehabilitation interventions: overview and methodology. Phys Ther. 2001;81(10):1629–1640.

11. Philadelphia Panel. Philadelphia Panel evidence-based clinical practice guidelines on selected rehabilitation interventions for low back pain. Phys Ther. 2001;81(10):1641–1674.

12. van Tulder M, Malmivaara A, Esmail R, Koes B. Exercise therapy for low back pain: a systematic review within the framework of the Cochrane Collaboration Back Review Group. Spine. 2000;25(21):2784–2796.

13. Wells KB. Treatment research at the crossroads: the scientific interface of clinical trials and effectiveness research. Am J Psychiatry. 1999;156(1):5–10.


Since 9-01-2022
